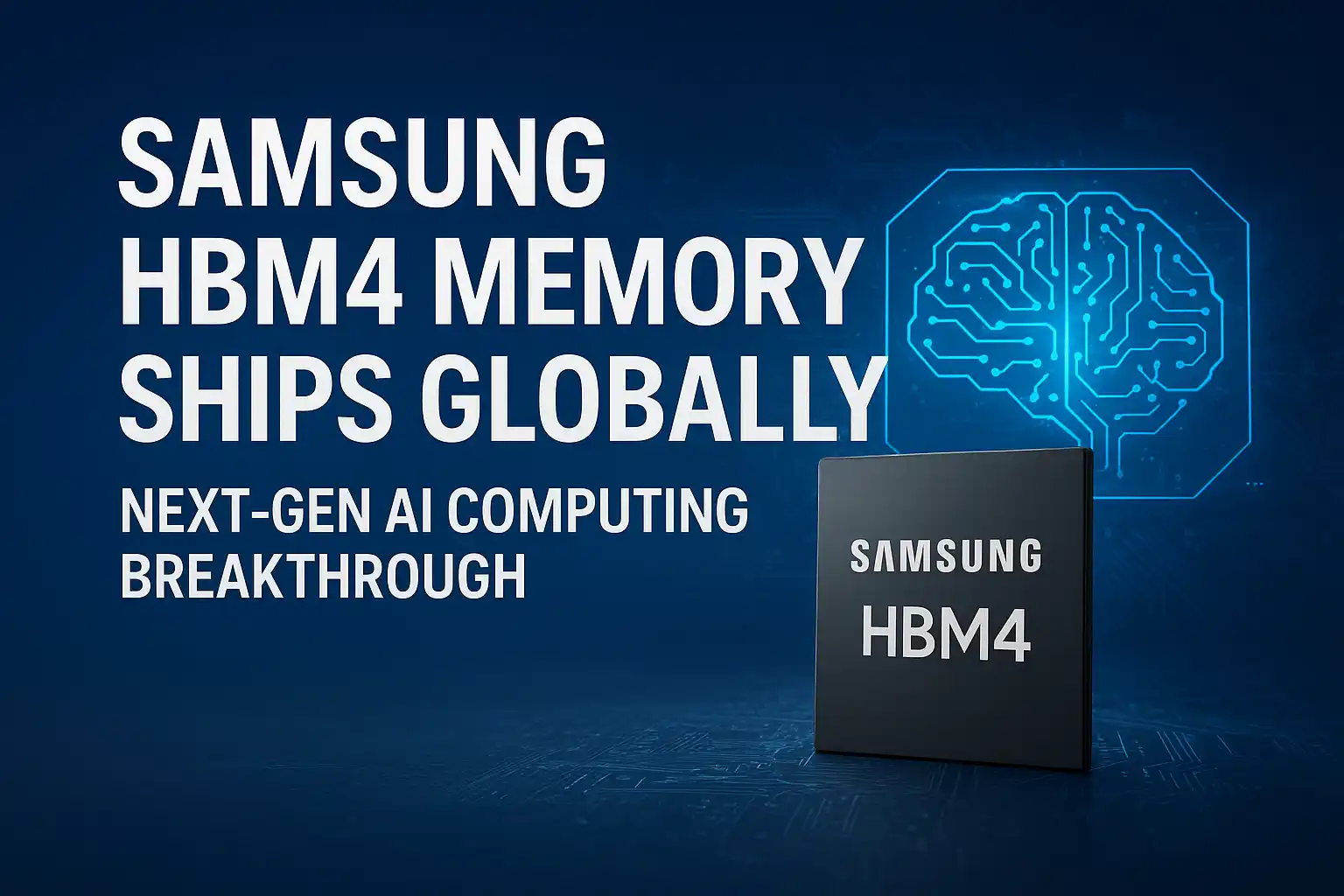

In a significant leap for the semiconductor and AI hardware industry, Samsung Electronics has officially begun the global shipment of its next-generation HBM4 (High Bandwidth Memory) chips. This milestone positions Samsung as one of the first companies to commercially deploy fourth-generation high-bandwidth memory specifically designed for AI servers, high-performance GPUs, and data center computing workloads.

Samsung claims that HBM4 offers higher per-pin speeds, greater bandwidth, better energy efficiency, and improved heat management, all of which matter for the heavy computational demands of AI workloads.

Understanding HBM4 Memory: What Makes It Special?

HBM4 is the latest generation of stacked DRAM designed to meet the needs of modern AI accelerators. Unlike conventional memory types, HBM4 moves data over a very wide interface in parallel at high speed, which is essential for training and running large AI models.

Key specifications include:

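- High Data Transfer Speeds: 11.7 Gbps per pin in standard operation, approximately 46% above the 8 Gbps industry-standard baseline and about 1.22 times the 9.6 Gbps peak of HBM3E, with headroom up to 13 Gbps.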

- Massive Bandwidth: Up to 3.3 terabytes per second per stack, enabling rapid movement of large datasets.

- Layered Architecture: Stacks up to 16 memory layers, providing up to 48GB of capacity per stack.

- Energy Efficiency: According to Samsung, power efficiency improves by about 40% over the previous generation.

- Thermal Management: Enhanced heat dissipation and temperature resistance make HBM4 suitable for dense server environments.

In simple terms, HBM4 works like a wider, faster highway between memory and processor, reducing the data bottlenecks that slow GPU-based AI systems.
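The per-stack figures above can be cross-checked with simple arithmetic. The sketch below is only a back-of-the-envelope estimate: it assumes a 2048-bit data interface per stack (the width defined by the JEDEC HBM4 standard, which the article itself does not state), and the function and constant names are illustrative, not part of any Samsung tool or API.

```python
# Back-of-the-envelope check of the per-stack figures quoted above.
# Assumption (not stated in the article): a 2048-bit data interface per
# HBM4 stack, as defined in the JEDEC HBM4 standard.

INTERFACE_WIDTH_BITS = 2048   # assumed I/O width per stack
PIN_SPEED_GBPS = 13.0         # "up to 13 Gbps" per pin, from the article
STACK_CAPACITY_GB = 48        # 16-layer stack capacity, from the article
LAYERS_PER_STACK = 16         # maximum layer count, from the article


def stack_bandwidth_tbps(pin_speed_gbps: float, width_bits: int) -> float:
    """Peak bandwidth of one stack in terabytes per second."""
    gigabits_per_second = pin_speed_gbps * width_bits
    gigabytes_per_second = gigabits_per_second / 8  # 8 bits per byte
    return gigabytes_per_second / 1000              # GB/s -> TB/s


def capacity_per_layer_gb(stack_capacity_gb: float, layers: int) -> float:
    """Capacity each DRAM layer must provide to reach the stack total."""
    return stack_capacity_gb / layers


bandwidth = stack_bandwidth_tbps(PIN_SPEED_GBPS, INTERFACE_WIDTH_BITS)
per_layer = capacity_per_layer_gb(STACK_CAPACITY_GB, LAYERS_PER_STACK)

print(f"Peak bandwidth per stack: {bandwidth:.2f} TB/s")
print(f"Capacity per layer: {per_layer:.1f} GB")
```

Under that assumption, 13 Gbps per pin works out to roughly 3.3 TB/s per stack, which matches the quoted bandwidth, and a 48GB, 16-layer stack implies 3GB per layer.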

Why HBM4 Is Critical for AI and Data Centers

Modern AI workloads, especially large language models, image recognition systems, and generative AI models, require enormous memory bandwidth to process billions of parameters efficiently. HBM4 addresses key challenges in AI computing:

- Performance: Faster memory keeps AI processors supplied with data so they spend less time waiting, which shortens training times.

- Scalability: High-capacity stacks support larger models and datasets, essential for next-generation AI systems.

- Energy and Cost Efficiency: Lower power consumption reduces electricity costs and thermal management expenses in large data centers.

- Reliability: Better thermal performance ensures consistent operation under high workloads.

This makes HBM4 a cornerstone technology for AI infrastructure, enabling companies to train more complex AI models faster and at a lower cost.
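To make the bandwidth argument concrete, the sketch below estimates a rough lower bound on how long it takes to read a model's weights once from memory. Only the 3.3 TB/s per-stack figure comes from the article; the model size, bytes per parameter, and number of stacks are hypothetical values chosen for illustration, and real systems will fall short of peak bandwidth.

```python
# Rough lower bound on the time needed to read a model's weights once
# from HBM. All model and system parameters below are hypothetical.

PER_STACK_BANDWIDTH_TBPS = 3.3   # per-stack figure quoted in the article


def weight_read_time_ms(num_params: float, bytes_per_param: int,
                        num_stacks: int,
                        per_stack_tbps: float = PER_STACK_BANDWIDTH_TBPS) -> float:
    """Milliseconds to stream all weights once at peak aggregate bandwidth."""
    model_bytes = num_params * bytes_per_param
    aggregate_bytes_per_s = num_stacks * per_stack_tbps * 1e12
    return model_bytes / aggregate_bytes_per_s * 1000


# Hypothetical example: 70 billion parameters stored as 2-byte values,
# served by an accelerator with 8 HBM stacks.
t_ms = weight_read_time_ms(num_params=70e9, bytes_per_param=2, num_stacks=8)
print(f"One full pass over the weights: {t_ms:.2f} ms")
```

Because the estimate scales inversely with bandwidth, doubling the aggregate bandwidth halves this lower bound, which is why memory speed directly limits how quickly large models can be trained or served.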

Industry Applications and Strategic Impact

The initial shipments of HBM4 are expected to reach key AI hardware manufacturers, including NVIDIA, for integration into their next-generation AI accelerators and GPUs.

By being among the first to ship HBM4 commercially, Samsung gains a strategic advantage in the global memory market, where it competes directly with:

- SK Hynix

- Micron Technology

Analysts predict that demand for high-bandwidth memory will grow sharply as AI adoption expands across cloud platforms, autonomous vehicles, robotics, and industrial AI applications.

Market and Financial Implications

Samsung’s move is expected to strengthen its position in the AI memory sector, which is becoming increasingly critical for semiconductor revenue growth. Early market projections suggest:

- Revenue Potential: HBM4 could contribute to triple-digit percentage growth in Samsung’s AI memory segment in 2026.

- Investor Confidence: Stock market analysts have reacted positively, viewing HBM4 as a competitive edge in high-performance computing.

- AI Ecosystem Impact: By providing high-performance memory, Samsung indirectly boosts the capabilities of AI developers, data centers, and cloud providers globally.

Looking Ahead: HBM4 and Beyond

While HBM4 represents a major technological advancement, Samsung is already planning next-generation memory solutions, including HBM4E and other ultra-high-speed memory variants. These developments are aimed at further increasing capacity, bandwidth, and energy efficiency, keeping pace with the rapid evolution of AI workloads.

Experts believe that memory bandwidth will become a critical bottleneck in AI hardware performance, and that technologies like HBM4 will play a decisive role in determining which companies lead the next phase of AI computing.

Outcome

Samsung’s HBM4 shipment is not just a product launch but a strategic milestone for AI infrastructure. As AI workloads expand across industries, high-bandwidth, energy-efficient memory becomes essential. HBM4 is designed to help AI companies train and deploy large models faster, more efficiently, and at scale.

This step cements Samsung’s role as a key innovator in AI memory technology, shaping the global semiconductor and AI ecosystem for years to come.

Source: Samsung news